Research Projects

Rendering Specular Microgeometry with Wave Optics

Simulation of light reflection from specular surfaces is a core problem of computer graphics. Most existing solutions either make the approximation of providing only a large-area average solution in terms of a fixed BRDF (ignoring spatial detail), or are based only on geometric optics (which is an approximation to more accurate wave optics), or both. We design the first rendering algorithm that is based on a wave optics model but is also able to compute spatially-varying specular highlights with high-resolution detail. We compute a wave optics reflection integral over the coherence area; our solution is based on approximating the phase-delay grating representation of a micron-resolution surface heightfield using Gabor kernels. Our results show both single-wavelength and spectral solutions to reflection from common everyday objects, such as brushed, scratched and bumpy metals.
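To illustrate why a micron-scale heightfield behaves like a phase grating, the sketch below computes the reflected field of a small patch under normal incidence and takes its far-field (Fraunhofer) intensity with an FFT. This is a brute-force stand-in, not the Gabor-kernel method described above: it assumes normal incidence, a patch no larger than the coherence area, and ignores Fresnel factors and shadowing. The function names are hypothetical.

```python
import numpy as np


def phase_grating(height, wavelength):
    """Complex reflected field of a heightfield (same units as wavelength).

    Under normal incidence a bump of height h shortens the round trip by 2h,
    which shows up as a phase delay of 2 * k * h with k = 2*pi / wavelength.
    """
    k = 2.0 * np.pi / wavelength
    return np.exp(-2j * k * height)


def far_field_intensity(height, wavelength):
    """Fraunhofer (FFT) intensity of the patch, normalized to sum to 1.

    fftshift moves the zero-frequency bin (the mirror direction) to the center.
    """
    field = phase_grating(height, wavelength)
    spectrum = np.fft.fftshift(np.fft.fft2(field))
    intensity = np.abs(spectrum) ** 2
    return intensity / intensity.sum()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    flat = np.zeros((64, 64))
    bumpy = rng.normal(scale=100e-9, size=(64, 64))  # roughly 100 nm RMS bumps
    for name, h in [("flat", flat), ("bumpy", bumpy)]:
        I = far_field_intensity(h, 500e-9)  # 500 nm light
        print(name, "fraction in mirror direction:", I[32, 32])
```

For the flat patch all energy stays in the mirror direction; bumps of a fraction of a wavelength scatter much of it elsewhere, a wavelength-dependent effect that geometric optics does not capture.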

You can download the SIGGRAPH 2018 paper (PDF), video (MP4), supplemental material (Zip Archive) and more from Lingqi Yan's page.


Predicting Appearance from Measured Microgeometry of Metal Surfaces

The visual appearance of many materials is created by micro-scale details of their surface geometry. In this paper, we investigate a new approach to capturing the appearance of metal surfaces without reflectance measurements, by deriving microfacet distributions directly from measured surface topography. Modern profilometers are capable of measuring surfaces with sub-wavelength resolution at increasingly rapid rates. We consider both wave- and geometric-optics methods for predicting BRDFs of measured surfaces and compare the results to optical measurements from a gonioreflectometer for five rough metal samples. Surface measurements are also used to predict spatial variation, or texture, which is especially important for the appearance of our anisotropic brushed metal samples. Profilometer-based BRDF acquisition offers many potential advantages over traditional techniques, including speed and easy handling of anisotropic, highly directional materials. We also introduce a new generalized normal distribution function, the ellipsoidal NDF, to compactly represent non-symmetric features in our measured data and texture synthesis.
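The ellipsoidal NDF is only named here. One way to write a normal distribution for a general, possibly rotated, ellipsoid {x : xᵀS⁻¹x = 1} follows from its Gaussian curvature: the surface area per unit solid angle of normals m is det(S)/(mᵀSm)². Dividing by the ellipsoid's silhouette area, π√det(S_xy) with S_xy the upper-left 2x2 block of S, normalizes it as a microfacet distribution. The sketch below assumes this parameterization, which may differ from the paper's. With an axis-aligned S = diag(1/αx², 1/αy², 1) it reduces to anisotropic GGX, while a rotated S produces a tilted, non-symmetric lobe. The function names are hypothetical.

```python
import numpy as np


def ellipsoid_ndf(m, S):
    """Normal distribution of the ellipsoid {x : x^T S^-1 x = 1}.

    m : unit normal(s), shape (..., 3), assumed to have m_z > 0
    S : symmetric positive-definite 3x3 matrix, S = R diag(a^2, b^2, c^2) R^T
        for semi-axes a, b, c and rotation R
    Area per unit solid angle is det(S) / (m^T S m)^2; dividing by the silhouette
    area pi * sqrt(det(S[:2, :2])) makes the projected microfacet area equal 1.
    """
    m = np.asarray(m, dtype=float)
    quad = np.einsum("...i,ij,...j->...", m, S, m)
    silhouette = np.pi * np.sqrt(np.linalg.det(S[:2, :2]))
    return np.linalg.det(S) / (silhouette * quad**2)


def ggx_anisotropic(m, ax, ay):
    """Standard anisotropic GGX, for comparison with the axis-aligned case."""
    mx, my, mz = m
    return 1.0 / (np.pi * ax * ay * (mx**2 / ax**2 + my**2 / ay**2 + mz**2) ** 2)


def rotation_y(angle):
    """Rotation about the y axis, used to tilt the ellipsoid."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])
```

A tilted distribution is then `ellipsoid_ndf(m, R @ S0 @ R.T)` for a rotation `R` and an axis-aligned `S0`; a numerical integral of D(m)(m·z) over the upper hemisphere should come out close to 1.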

The project page has links to download the paper (ACM Transactions on Graphics 2015) and related materials, now updated with the measured data and ellipsoid importance sampling.

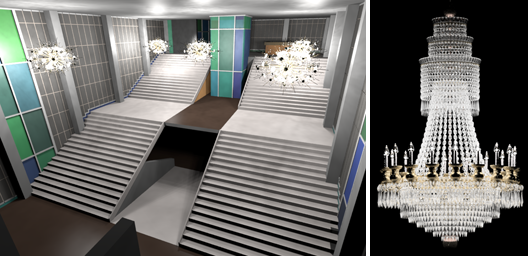

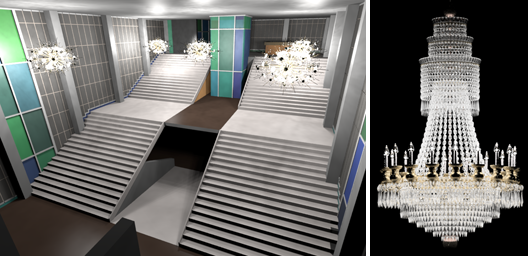

Complex Luminaires: Illumination and Appearance Rendering

Simulating a complex luminaire, such as a chandelier, is expensive. Prior approaches cached information on an aperture surface that separates the luminaire from the scene, but many luminaires have large or ill-defined apertures, leading to excessive data storage and inaccurate results. In this paper, we separate luminaire rendering into illumination and appearance components. A precomputation stage simulates the complex light flow inside the luminaire to generate two data structures: a set of anisotropic point lights (APLs) and a radiance volume. The APLs represent the light leaving the luminaire, allowing its near- and far-field illumination to be efficiently computed at render time. The luminaire's appearance consists of high- and low-frequency components, both of which are visually important. High-frequency components are computed dynamically at render time, while the more computationally expensive low-frequency components are approximated using the precomputed radiance volume.
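The APL data layout is not spelled out here. As a minimal sketch, assume each APL is a position plus a table of intensities over (θ, φ) bins; the irradiance it delivers to a receiver is then the tabulated intensity toward that receiver times the receiver's cosine, divided by the squared distance, and a luminaire's illumination is the sum over its APLs. The class, table layout, and nearest-bin lookup are hypothetical, and visibility is ignored.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class AnisotropicPointLight:
    position: Sequence[float]
    table: List[List[float]]  # table[i][j]: intensity for theta bin i, phi bin j

    def intensity(self, d):
        """Tabulated intensity toward unit direction d (nearest bin, no interpolation)."""
        theta = math.acos(max(-1.0, min(1.0, d[2])))
        phi = math.atan2(d[1], d[0]) % (2.0 * math.pi)
        n_theta, n_phi = len(self.table), len(self.table[0])
        i = min(int(theta / math.pi * n_theta), n_theta - 1)
        j = min(int(phi / (2.0 * math.pi) * n_phi), n_phi - 1)
        return self.table[i][j]

    def irradiance(self, point, normal):
        """I(d) * cos(theta_receiver) / r^2 at a receiver with unit normal."""
        v = [p - q for p, q in zip(point, self.position)]
        r2 = sum(c * c for c in v)
        r = math.sqrt(r2)
        d = [c / r for c in v]
        cos_recv = -sum(a * b for a, b in zip(d, normal))
        if cos_recv <= 0.0:
            return 0.0  # receiver faces away from the light
        return self.intensity(d) * cos_recv / r2


def total_irradiance(apls, point, normal):
    """Illumination from a whole luminaire: the sum over its APLs."""
    return sum(a.irradiance(point, normal) for a in apls)
```

Because each APL is just a point with a direction-dependent intensity, it can be evaluated both close to the luminaire and far away, which is the near- and far-field use described above.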

You can download the paper (PDF, ACM Transactions on Graphics 2015) here.


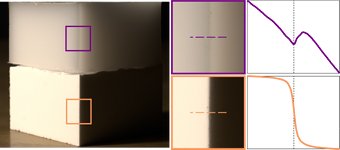

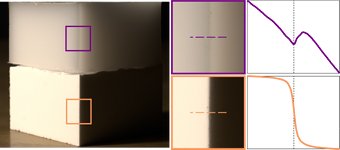

On the Appearance of Translucent Edges

This project investigated the visual appearance of edges on translucent objects: how they differ from opaque edges, what causes their unusual features, and how these features relate to material properties.

The project page has links to download the paper (CVPR 2015) and related materials.

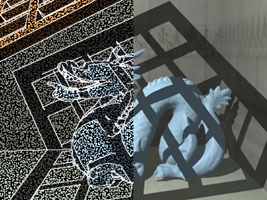

Bidirectional Lightcuts

Scenes modeling the real world combine a wide variety of phenomena including glossy materials, detailed heterogeneous anisotropic media, subsurface scattering, and complex illumination. Predictive rendering of such scenes is difficult; unbiased algorithms are typically too slow or too noisy. Virtual point light (VPL) based algorithms produce low-noise results across a wide range of performance/accuracy tradeoffs, from interactive rendering to high-quality offline rendering, but their bias means that locally important illumination features may be missing. We introduce a bidirectional formulation and a set of weighting strategies to significantly reduce the bias in VPL-based rendering algorithms. Our approach, bidirectional lightcuts, maintains the scalability and low-noise global illumination advantages of prior VPL-based work, while significantly extending their generality to support a wider range of important materials and visual cues. We demonstrate scalable, efficient, and low-noise rendering of scenes with highly complex materials including gloss, BSSRDFs, and anisotropic volumetric models.
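The paper's own weighting strategies are not reproduced here. As a generic illustration of the underlying idea (weighting each sample by how likely every available strategy was to generate it), here is the balance heuristic from multiple importance sampling applied to a 1D integral. Treat it as a sketch of the general technique rather than the VPL-specific weights; in a VPL renderer the strategies might be, for example, a connection through a VPL and a locally traced path, a pairing that is our assumption. The function name is hypothetical.

```python
import random


def balance_heuristic_estimate(f, strategies, n_per_strategy, rng):
    """Multi-sample MIS estimate of the integral of f using the balance heuristic.

    strategies: list of (sample, pdf) pairs; sample(rng) draws x, pdf(x) is
    that strategy's density. A sample drawn by strategy a gets weight
    n_a * p_a(x) / sum_k n_k * p_k(x), so its contribution simplifies to
    f(x) / sum_k n_k * p_k(x).
    """
    total = 0.0
    for sample, _ in strategies:
        for _ in range(n_per_strategy):
            x = sample(rng)
            denom = sum(n_per_strategy * pdf(x) for _, pdf in strategies)
            if denom > 0.0:
                total += f(x) / denom
    return total


if __name__ == "__main__":
    # Two strategies for f(x) = x^2 on [0, 1): uniform, and pdf 2x via x = sqrt(u).
    uniform = (lambda r: r.random(), lambda x: 1.0)
    linear = (lambda r: r.random() ** 0.5, lambda x: 2.0 * x)
    print(balance_heuristic_estimate(lambda x: x * x, [uniform, linear], 20000, random.Random(7)))
```

Each strategy covers regions where the other has low density, so the combined estimate avoids the large weights that cause noise (or, when clamped, bias) in a single strategy.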

You can download a SIGGRAPH 2012 preprint paper (pdf) here.

The SIGGRAPH presentation slides are available in keynote format or as exports to pdf and powerpoint.


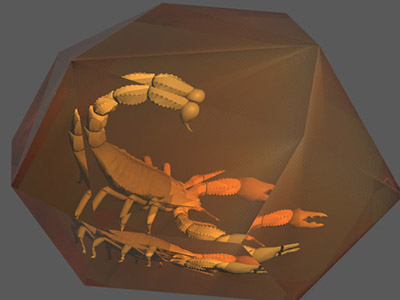

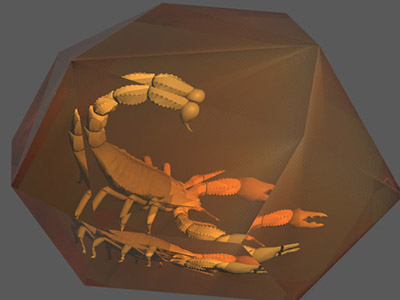

Single Scattering in Refractive Media with Triangle Mesh Boundaries

Light scattering in refractive media is an important optical phenomenon for computer graphics. While recent research has focused on multiple scattering, there has been less work on accurate solutions for single or low-order scattering. Refraction through a complex boundary allows a single external source to be visible in multiple directions internally with different strengths; these paths are hard to find with existing techniques. This paper presents techniques to quickly find paths that connect points inside and outside a medium while obeying the laws of refraction. We introduce a half-vector based formulation to support the most common geometric representation, triangles with interpolated normals; hierarchical pruning to scale to triangular meshes; a solver with strong accuracy guarantees; and a faster method that is empirically accurate. A GPU version achieves interactive frame rates in several examples.
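A path from an external point through a boundary point x to an internal point obeys Snell's law exactly when the generalized half-vector -(η_out ω_out + η_in ω_in), with both directions pointing away from x, is parallel to the surface normal at x. For triangles with interpolated normals, that normal is the normalized barycentric blend of the vertex normals. A minimal sketch of this residual, assuming NumPy and η_out ≠ η_in; the function names are hypothetical, and the paper's solvers and hierarchical pruning are not shown.

```python
import numpy as np


def normalize(v):
    return v / np.linalg.norm(v)


def interpolated_normal(bary, vertex_normals):
    """Shading normal at barycentric coordinates (b0, b1, b2) of a triangle."""
    return normalize(sum(b * np.asarray(n, dtype=float) for b, n in zip(bary, vertex_normals)))


def generalized_half_vector(x, p_out, p_in, eta_out, eta_in):
    """Refraction half-vector for the path p_out -> x -> p_in.

    Both directions point away from x. The result is parallel to the surface
    normal (up to sign) exactly when Snell's law holds at x.
    """
    w_out = normalize(p_out - x)
    w_in = normalize(p_in - x)
    return normalize(-(eta_out * w_out + eta_in * w_in))


def refraction_residual(x, bary, vertex_normals, p_out, p_in, eta_out, eta_in):
    """Sine of the angle between the half-vector and the interpolated normal."""
    h = generalized_half_vector(x, p_out, p_in, eta_out, eta_in)
    n = interpolated_normal(bary, vertex_normals)
    return float(np.linalg.norm(np.cross(h, n)))
```

A path-finding method can then search over points x on each triangle for zeros of this residual; because the normal varies smoothly across a triangle with interpolated normals, one triangle can contribute a valid path even where its flat geometric normal would not.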

You can download a SIGGRAPH 2009 preprint paper (pdf) and a quicktime movie here.

The presentation slides are available in keynote, pdf, or powerpoint + movies formats.


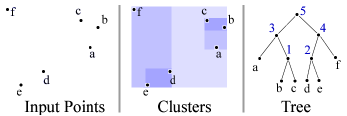

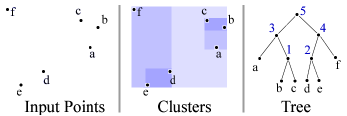

Fast Agglomerative Clustering

This paper describes how to quickly build binary trees using agglomerative (or bottom-up) construction and evaluates the quality of the trees compared to divisive (or top-down) construction in two applications: BVH trees for ray tracing and light trees for Lightcuts. We introduce a novel faster unordered agglomerative clustering algorithm for use when the clustering function is non-decreasing. Our results indicate that the agglomerative-built trees generally have higher quality, though the builds are a little slower than the fastest divisive approaches.
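One way to realize fast bottom-up construction is a mutual-best-match rule: follow a chain of best matches until two clusters are each other's best match, then merge them immediately instead of maintaining a global priority queue. This is valid when the dissimilarity never decreases as clusters merge, which holds for the surface area of a combined bounding box. The sketch assumes that dissimilarity and a brute-force best-match search; the names are hypothetical, and a practical builder would use a spatial search structure.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(eq=False)
class Cluster:
    lo: Vec3
    hi: Vec3
    left: Optional["Cluster"] = None
    right: Optional["Cluster"] = None


def merge(a, b):
    """New cluster whose bounding box encloses both children."""
    lo = tuple(min(p, q) for p, q in zip(a.lo, b.lo))
    hi = tuple(max(p, q) for p, q in zip(a.hi, b.hi))
    return Cluster(lo, hi, a, b)


def dissimilarity(a, b):
    """Surface area of the combined bounding box; it never decreases as clusters grow."""
    dx, dy, dz = (max(a.hi[k], b.hi[k]) - min(a.lo[k], b.lo[k]) for k in range(3))
    return 2.0 * (dx * dy + dy * dz + dz * dx)


def best_match(c, clusters):
    """Brute-force O(n) search over the other clusters."""
    return min((o for o in clusters if o is not c), key=lambda o: dissimilarity(c, o))


def locally_ordered_build(leaves):
    """Bottom-up binary tree: merge a pair as soon as each is the other's best match."""
    clusters = list(leaves)
    a = clusters[0]
    b = best_match(a, clusters) if len(clusters) > 1 else None
    while len(clusters) > 1:
        c = best_match(b, clusters)
        if dissimilarity(a, b) <= dissimilarity(b, c):  # a is also b's best match
            clusters = [o for o in clusters if o is not a and o is not b]
            a = merge(a, b)
            clusters.append(a)
            if len(clusters) > 1:
                b = best_match(a, clusters)
        else:
            a, b = b, c  # follow the chain; dissimilarity strictly decreases
    return clusters[0]
```

The `<=` test makes ties favor the existing pair, so the chain cannot cycle among equally good matches.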

You can download a preprint of the IRT08 paper (pdf) here.

The presentation slides are available in powerpoint and pdf formats.

Several people asked if a divisive builder that tries splits on all three axes rather than just the longest one would make a significant difference. I tried this, and the SAH-estimated cost results (see figure 6) are shown in the table below. The quality improved at the cost of slower builds but still does not match the agglomerative quality. The maximum depth of each BVH is shown in parentheses, with agglomerative trees tending to be a little deeper than the divisive ones.

| Model | Kitchen | Tableau | GCT | Temple |
|---|---|---|---|---|
| Divisive - 1 axis | (32) 46.1 | (31) 17.7 | (35) 70.5 | (42) 29.4 |
| Divisive - 3 axis | (30) 43.5 | (29) 17.4 | (33) 62.7 | (41) 28.2 |
| Agglomerative | (60) 36.1 | (39) 15.5 | (44) 54.0 | (65) 22.6 |
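The costs above are SAH estimates. The constants behind figure 6 are not given on this page, so the sketch below uses placeholder traversal and intersection costs (`c_trav`, `c_isect`) to show the standard surface-area-heuristic recurrence for a finished BVH. The node layout and names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class BVHNode:
    lo: Vec3
    hi: Vec3
    children: List["BVHNode"] = field(default_factory=list)
    num_prims: int = 0  # primitives stored in a leaf


def surface_area(lo, hi):
    dx, dy, dz = (h - l for l, h in zip(lo, hi))
    return 2.0 * (dx * dy + dy * dz + dz * dx)


def sah_cost(node, c_trav=1.0, c_isect=1.0):
    """Expected cost of tracing a random ray that hits this node's box.

    Each child is visited with probability SA(child) / SA(node), the usual
    SAH assumption for uniformly distributed rays.
    """
    if not node.children:
        return c_isect * node.num_prims
    parent_area = surface_area(node.lo, node.hi)
    return c_trav + sum(
        surface_area(c.lo, c.hi) / parent_area * sah_cost(c, c_trav, c_isect)
        for c in node.children
    )
```

Depth does not appear in the recurrence directly, which is consistent with the table: the agglomerative trees are deeper yet have lower estimated cost, because tighter child boxes lower the visit probabilities.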

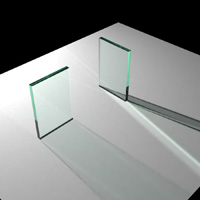

Microfacet Refraction

This work extends microfacet theory (e.g., Cook-Torrance models) to handle refraction through rough surfaces such as the etched glass globe shown. We also discuss the Smith shadowing-masking approximation for general microfacet distributions and good importance sampling strategies (which are essential when simulating transmitted light). Published at EGSR 2007. You can download the EGSR 2007 paper (pdf) here.

Erratum: Fixed typo in equation 40 (eta should be eta^2 inside the square root).
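The corrected term matches the usual vector form of refraction through a microfacet with normal m: o = (η c - sign(i·n) √(1 + η²(c² - 1))) m - η i, where c = i·m and η = η_i/η_o. A small sketch, assuming this is the expression the erratum refers to and the direction conventions stated in the docstring; the function name is hypothetical.

```python
import math


def refract(i, m, n, eta):
    """Refracted direction through a microfacet with normal m.

    i   : unit incident direction, pointing away from the surface
    m   : unit microfacet normal
    n   : unit macrosurface normal (only which side i is on matters)
    eta : eta_i / eta_o, ratio of refractive indices across the boundary
    Returns the unit transmitted direction, or None on total internal reflection.
    """
    c = sum(a * b for a, b in zip(i, m))
    k = 1.0 + eta * eta * (c * c - 1.0)  # eta squared under the root
    if k < 0.0:
        return None
    sign = 1.0 if sum(a * b for a, b in zip(i, n)) >= 0.0 else -1.0
    coef = eta * c - sign * math.sqrt(k)
    return tuple(coef * mc - eta * ic for mc, ic in zip(m, i))
```

With η rather than η² under the root, the result is no longer a unit vector and violates Snell's law whenever η ≠ 1, which is an easy way to catch the typo numerically.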


Multidimensional Lightcuts

This work extends the approach and concepts of Lightcuts to scalable rendering of complex effects such as motion blur, depth of field, and volume rendering. We achieve greater scalability by holistically considering complete pixel integrals and using an implicit hierarchy called the product graph.
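The product graph can be pictured as pairs of nodes drawn from two ordinary trees: one over the gather points of a pixel (for example, samples spread over time, lens, and pixel area) and one over the lights. A minimal sketch of that implicit structure, assuming a simple size-based rule for which side to refine; the names and the rule are hypothetical, and the error bounds that drive an actual cut are omitted.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class TreeNode:
    name: str
    size: float  # e.g., bounding-box diagonal of the cluster
    children: List["TreeNode"] = field(default_factory=list)


def product_children(gather, light):
    """Children of the implicit product-graph node (gather, light).

    Only the two trees are stored; a product node is just a pair. Refining
    splits one side, here the one with the larger size (a heuristic choice).
    """
    if not gather.children and not light.children:
        return []
    if light.children and (not gather.children or light.size >= gather.size):
        return [(gather, c) for c in light.children]
    return [(c, light) for c in gather.children]


def fully_refined(gather, light):
    """All (gather leaf, light leaf) pairs reachable from (gather, light)."""
    kids = product_children(gather, light)
    if not kids:
        return [(gather.name, light.name)]
    pairs: List[Tuple[str, str]] = []
    for g, l in kids:
        pairs.extend(fully_refined(g, l))
    return pairs
```

A full refinement visits every gather-light pair; the point of a cut through the product graph is to stop much earlier, treating whole groups of pairs as a single cluster wherever the error bounds allow.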

You can download the SIGGRAPH 2006 paper (pdf) and a movie from the

The SIGGRAPH presentation slides are available in powerpoint or pdf formats, and a separate archive of clips is on the movies page. (Note: the powerpoint file was created on a Macintosh computer and some of the graphs do not seem to display properly under Windows.)


Lightcuts: A Scalable Approach to Illumination

Computing the illumination from complex sources such as area lights, HDR environment maps, and indirect illumination can be very expensive. Lightcuts introduces a new scalable algorithm for computing the illumination from thousands or millions of point lights. Using a perceptual metric, a binary light tree of clusters, and conservative per-cluster error bounds, we show that we can accurately compute the illumination from hundreds of point lights using only hundreds of shadow rays. A lightcut is a cut through the global light tree that adaptively partitions the lights into clusters to locally control the cost vs. error tradeoff. We also introduce a related technique called reconstruction cuts that uses the lightcut framework to further reduce shading costs by intelligently interpolating illumination while preserving high-frequency details like shadow boundaries and glossy highlights.
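The cut refinement can be sketched in a toy setting: lights on a line, a receiver above it, and inverse-square falloff as the only term. Each cluster is estimated by one representative light; its error is bounded by its total intensity times the largest falloff anywhere in its bounding interval; and the cluster with the largest bound is split until every bound is below 2% of the total estimate (the perceptual threshold used for Lightcuts). Everything else here is a simplifying assumption: no cosine, BRDF, or visibility terms, a median-split tree, and hypothetical names.

```python
import heapq
from dataclasses import dataclass
from typing import Optional


@dataclass(eq=False)
class LightNode:
    lo: float         # bounding interval of light x-positions (lights lie on y = 0)
    hi: float
    intensity: float  # total intensity of the cluster
    rep: float        # x-position of the representative light
    left: Optional["LightNode"] = None
    right: Optional["LightNode"] = None


def build_tree(lights):
    """lights: list of (x, intensity) sorted by x. Median split, for brevity."""
    if len(lights) == 1:
        x, i = lights[0]
        return LightNode(x, x, i, x)
    mid = len(lights) // 2
    l, r = build_tree(lights[:mid]), build_tree(lights[mid:])
    rep = l.rep if l.intensity >= r.intensity else r.rep
    return LightNode(l.lo, r.hi, l.intensity + r.intensity, rep, l, r)


def geometric(x, recv):
    """Inverse-square falloff from a light at (x, 0) to the receiver (rx, ry), ry > 0."""
    rx, ry = recv
    return 1.0 / ((x - rx) ** 2 + ry ** 2)


def estimate(node, recv):
    return node.intensity * geometric(node.rep, recv)


def error_bound(node, recv):
    """Upper bound on |cluster error|: intensity times the largest possible falloff."""
    if node.left is None:
        return 0.0  # a single light is evaluated exactly
    rx, ry = recv
    dx = max(node.lo - rx, 0.0, rx - node.hi)  # distance to the bounding interval
    return node.intensity / (dx * dx + ry * ry)


def lightcut(root, recv, rel_err=0.02):
    """Refine until every cluster's bound is at most rel_err of the total estimate."""
    total = estimate(root, recv)
    heap = [(-error_bound(root, recv), 0, root)]
    counter = 1  # tie-breaker so nodes are never compared
    while heap and -heap[0][0] > rel_err * total:
        _, _, node = heapq.heappop(heap)
        total -= estimate(node, recv)
        for child in (node.left, node.right):
            total += estimate(child, recv)
            heapq.heappush(heap, (-error_bound(child, recv), counter, child))
            counter += 1
    return total, [node for _, _, node in heap]
```

Lights near the receiver end up as individual nodes in the cut, while distant ones stay grouped, so the cut size is typically far smaller than the number of lights.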

You can download the SIGGRAPH 2005 paper (pdf) (Note: several figures were printed incorrectly in the proceedings but are correct in the PDF) and a movie from the

The SIGGRAPH presentation slides are available in powerpoint or pdf formats.

A related technical report discusses several important implementation issues for the Ward BRDF, including the correct sampling weights to use with its associated Monte Carlo sampling distribution.


Perceptual Illumination Components

Different components of global illumination have widely varying costs, but may not provide the same benefits to image quality. We performed a user study to examine this issue. We divided the illumination into several components based on the BRDF (indirect diffuse, indirect gloss, indirect specular) and rendered images with different subsets of the illumination. We then had people rank the images and studied the results. We found that the components had differing effects on user rankings (though indirect diffuse was usually the most important), but that we could predict their importance quite accurately using a simple model and some simple image/material statistics. We think this can provide insight for creating more efficient rendering systems in the future.

Published in SIGGRAPH 2004. More information can be found on the first author's page.

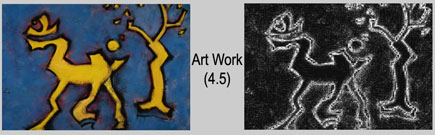

Feature-Based Textures

By adding explicit features (e.g., discontinuity edges) to standard raster-based textures, we can increase image quality, especially during magnification, with only minimal extra storage. Features can be automatically extracted from vector-format images, approximated for raster images, or hand-annotated.
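One simple way to use a feature edge during magnification is to keep bilinear weights but ignore texels that lie on the other side of the edge, so the discontinuity stays sharp instead of being blurred across the cell. The sketch assumes a single straight edge crossing one 2x2 texel cell; the names and fallback rules are hypothetical and not necessarily the paper's reconstruction.

```python
def _side(edge, p):
    """True if point p lies on the left of (or on) the directed edge (a, b)."""
    (x0, y0), (x1, y1) = edge
    return (x1 - x0) * (p[1] - y0) - (y1 - y0) * (p[0] - x0) >= 0.0


def feature_bilinear(texels, edge, u, v):
    """Bilinear lookup in one texel cell that never blends across a feature edge.

    texels: [[t00, t10], [t01, t11]], corner values indexed texels[y][x]
    edge:   ((x0, y0), (x1, y1)) in cell coordinates [0, 1]^2
    (u, v): lookup position inside the cell
    """
    side = _side(edge, (u, v))
    num = den = 0.0
    nearest, nearest_d2 = None, float("inf")
    for cy in (0, 1):
        for cx in (0, 1):
            if _side(edge, (cx, cy)) != side:
                continue  # texel lies across the feature: ignore it
            w = (u if cx else 1.0 - u) * (v if cy else 1.0 - v)
            num += w * texels[cy][cx]
            den += w
            d2 = (u - cx) ** 2 + (v - cy) ** 2
            if d2 < nearest_d2:
                nearest, nearest_d2 = texels[cy][cx], d2
    if den > 0.0:
        return num / den
    if nearest is not None:
        return nearest  # same-side texels exist but all have zero weight here
    # no texel on this side of the edge: fall back to ordinary bilinear
    return ((1 - u) * (1 - v) * texels[0][0] + u * (1 - v) * texels[0][1]
            + (1 - u) * v * texels[1][0] + u * v * texels[1][1])
```

With a dark left column, a bright right column, and a vertical edge through the middle of the cell, this returns 0 just left of the edge and 1 just right of it, where ordinary bilinear filtering would return intermediate values such as 0.4 and 0.6.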

Published in EGSR 2004. More information can be found on this project page.

Combining Edges and Points

By combining edges (explicit discontinuities) and points (shading samples), we are able to reconstruct high-quality images from relatively sparse data. By computing the exact locations of discontinuities, we are able to interpolate the sparse data without smoothing out these sharp features, and we also show that knowing the subpixel location of the edges allows us to compute good-quality anti-aliasing almost for free and without supersampling. Our software implementation runs at interactive rates on the CPU, and future versions should be able to exploit the GPU for even faster framerates.
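Knowing an edge's exact subpixel position means pixel coverage can be computed analytically rather than by supersampling. A minimal sketch, assuming a single straight edge per pixel given as a line a*x + b*y + c = 0 (inside where the expression is non-negative): it clips the unit pixel square to that half-plane, measures the covered area, and blends the shading on the two sides. The names are hypothetical, and pixels crossed by several edges need more work.

```python
def clip_half_plane(poly, a, b, c):
    """Sutherland-Hodgman clip of a polygon to the half-plane a*x + b*y + c >= 0."""
    out = []
    for k in range(len(poly)):
        p, q = poly[k], poly[(k + 1) % len(poly)]
        fp = a * p[0] + b * p[1] + c
        fq = a * q[0] + b * q[1] + c
        if fp >= 0.0:
            out.append(p)
        if (fp >= 0.0) != (fq >= 0.0):  # this polygon edge crosses the line
            t = fp / (fp - fq)
            out.append((p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])))
    return out


def polygon_area(poly):
    """Shoelace formula."""
    return 0.5 * abs(sum(p[0] * q[1] - q[0] * p[1] for p, q in zip(poly, poly[1:] + poly[:1])))


def edge_coverage(px, py, a, b, c):
    """Exact fraction of pixel [px, px+1] x [py, py+1] on the inside of the edge."""
    square = [(px, py), (px + 1, py), (px + 1, py + 1), (px, py + 1)]
    return polygon_area(clip_half_plane(square, a, b, c))


def antialiased_pixel(px, py, edge, inside_color, outside_color):
    """Blend the shading on each side of one edge by its exact coverage."""
    f = edge_coverage(px, py, *edge)
    return tuple(f * ci + (1.0 - f) * co for ci, co in zip(inside_color, outside_color))
```

For example, the edge x = 0.25 (a = -1, b = 0, c = 0.25) covers exactly a quarter of pixel (0, 0), so a white-on-black edge yields 0.25 there.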

Published at SIGGRAPH 2003. More information can be found on this project page.

A GPU-based version was published at Graphics Interface 2006.

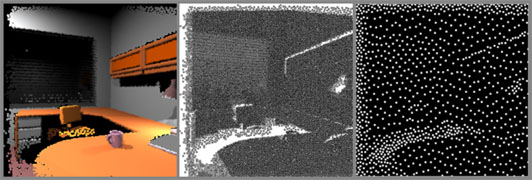

Render Cache for Interactive Rendering

During an interactive session it is not necessary (and perhaps not even desirable) to render every pixel of every frame, especially when using more expensive rendering methods such as global illumination. In most cases, there is considerable coherence from one frame to the next, which we can exploit to reduce our computational expense. Also, in an interactive session, visual artifacts are acceptable as long as they are small, fixed quickly, and do not distract the user. In rendering methods such as ray tracing or global illumination, the cost of rendering a pixel varies greatly depending on factors such as material, local geometry, and complexity of the local lighting. Thus rerendering every pixel would make the framerate highly variable, which is far more objectionable in an interactive session than some minor visual artifacts would be.

The render cache enables interactive display by caching rendered results as colored 3D points, reprojecting them to estimate the current image, and automatically deciding which subset of pixels should be (re)rendered for future frames. Because the image generation and pixel (or ray) rendering happen asynchronously, we can guarantee a fast, consistent framerate regardless of rendering cost (though rendering cost does affect visual quality).
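The reprojection step can be sketched as splatting each cached point into the new view with a z-buffer and flagging pixels that received nothing as candidates for rendering. A minimal sketch, assuming a pinhole camera looking down -z and NumPy arrays; the function name is hypothetical, and it leaves out the full system's heuristics for choosing which pixels to (re)render first and for hiding small gaps.

```python
import math

import numpy as np


def reproject(points, colors, world_to_camera, focal, width, height):
    """Splat cached colored 3D points into a new frame using a z-buffer.

    points: (N, 3) world-space positions; colors: (N, 3).
    world_to_camera: 4x4 matrix; the camera looks down -z.
    Returns (image, needs_render), where needs_render marks pixels that
    received no cached point.
    """
    image = np.zeros((height, width, 3))
    depth = np.full((height, width), np.inf)
    homogeneous = np.c_[points, np.ones(len(points))] @ world_to_camera.T
    for (x, y, z, _), color in zip(homogeneous, colors):
        if z >= 0.0:
            continue  # behind the camera
        px = math.floor(focal * x / -z + width / 2)
        py = math.floor(focal * y / -z + height / 2)
        if 0 <= px < width and 0 <= py < height and -z < depth[py, px]:
            depth[py, px] = -z  # keep the nearest point for each pixel
            image[py, px] = color
    return image, np.isinf(depth)
```

Each frame, the display loop would reproject the cache, send some of the flagged pixels (plus some stale ones) to the renderer, and add the returned samples to the cache, so display speed does not wait on rendering speed.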

In our experiments we have found that we get very good visual quality when only one tenth of the pixels are rendered per frame, and acceptable quality when only one hundredth of the pixels are rendered per frame (the rest of the pixels are estimated by reprojecting older results).

This work was begun while I was a post-doc at the iMAGIS lab in Grenoble, France and continued at Cornell.

You can view a project page here (including a link to the Eurographics Rendering Workshop '99 paper). (Powerpoint presentation)

A followup paper, Enhancing and Optimizing the Render Cache, appeared in EGRW 2002. (Powerpoint presentation)

A GPU-based version was published at Graphics Interface 2006.

There is also a web-downloadable binary so that you can try it out for yourself, or you can download the C++ source code under the GPL license and compile it yourself.

Using Perceptual Texture Masking for Efficient Image Synthesis

This work describes a simple and fast way to precompute the perceptual masking ability of textures, stored as threshold elevation factors alongside the texture. These factors can then be used during interactive computations to allow higher error thresholds in textured regions with almost no runtime overhead.
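The masking model is not given on this page. As a minimal sketch, assume a power-law contrast-masking rule applied per block of texels: flat regions keep a threshold elevation factor (TEF) of 1, while busy regions raise the visible-error threshold. The model, its constants `c0` and `exponent`, and the function names are hypothetical placeholders; only the overall shape (precompute once, look up cheaply at runtime) follows the description above.

```python
import numpy as np


def threshold_elevation_map(texture, block=8, c0=0.01, exponent=0.7):
    """Per-block threshold elevation factors for a grayscale texture.

    Hypothetical masking model: TEF = max(1, (C / c0) ** exponent), where C is
    the RMS contrast (std / mean) of each block. Computed once, stored with
    the texture.
    """
    h, w = texture.shape
    if h % block or w % block:
        raise ValueError("texture size must be a multiple of the block size")
    blocks = texture.reshape(h // block, block, w // block, block)
    mean = blocks.mean(axis=(1, 3))
    std = blocks.std(axis=(1, 3))
    contrast = np.divide(std, mean, out=np.zeros_like(std), where=mean > 0)
    return np.maximum(1.0, (contrast / c0) ** exponent)


def allowed_error(base_threshold, tef_map, by, bx):
    """Runtime lookup: relax the error threshold in block (by, bx) by its TEF."""
    return base_threshold * tef_map[by, bx]
```

At runtime the only cost is one table lookup and a multiply per query, which matches the "almost no runtime overhead" goal.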

Published at Eurographics 2002. You can get the paper here.

Density Estimation for Global Illumination

I investigated particle tracing and density estimation as a method to compute radiosity solutions on large environments. Traditional radiosity methods have been limited by their large memory requirements. The density estimation method uses much less memory because it can efficiently use secondary storage (e.g., disk) for most of its storage needs. It can handle all transport paths (arbitrary BRDFs), and it nicely decomposes the global illumination problem into three pieces: particle tracing, density estimation, and mesh decimation.
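As a sketch of the density estimation phase alone, assume the particle tracing phase has already recorded where particles landed on a patch, along with the power each one carries. A kernel estimate then turns that hit list into a smooth power-per-unit-area function. For brevity the patch here is 1D (a real surface is 2D), and the Epanechnikov kernel and fixed bandwidth are assumptions for illustration; the names are hypothetical.

```python
import numpy as np


def epanechnikov(u):
    """1D Epanechnikov kernel; integrates to 1 over [-1, 1]."""
    return np.where(np.abs(u) < 1.0, 0.75 * (1.0 - u * u), 0.0)


def density_estimate(hit_positions, hit_power, x, bandwidth):
    """Kernel estimate of power per unit length at x from particle hits on a 1D patch.

    hit_positions: where traced particles landed on the patch
    hit_power:     power carried by each particle (scalar or per-hit array)
    """
    hits = np.asarray(hit_positions, dtype=float)
    weights = epanechnikov((x - hits) / bandwidth)
    return float(np.sum(hit_power * weights) / bandwidth)
```

Because each estimate only needs the hits that landed on one patch, hits can be streamed to disk grouped by patch and processed one patch at a time, which is what keeps memory use low.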

You can get my thesis here.

You can get our Transactions on Graphics 1997 paper here.

You can get our Eurographics Rendering Workshop '95 paper here.

Fitting Virtual Lights for Non-Diffuse Walkthroughs

The essence of this work is to find ways to encode non-diffuse illumination solutions for rapid display using standard 3D graphics interfaces (e.g., OpenGL) and hardware. We want to be able to use a sophisticated lighting algorithm such as global illumination with arbitrary material properties, but we also want to be able to display the results quickly enough for interactive walkthroughs. Current realtime graphics interfaces and hardware implement only a relatively primitive local shading model; Phong shading and perhaps some shadows are about the limit of their abilities. We investigate the use of these basic primitives as a set of "appearance basis" functions that can be used to approximate the appearance computed by some more accurate, complete, and general model.
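If the hardware-shaded appearance of each candidate virtual light is treated as one basis function, fitting becomes a linear problem: find light intensities whose combined appearance best matches the accurate solution at a set of sample points. A minimal sketch, assuming plain least squares with negative intensities clamped to zero (a crude stand-in for a true nonnegative solver); the actual fitting procedure is not described here, and the function name is hypothetical.

```python
import numpy as np


def fit_virtual_lights(basis, target):
    """Fit intensities for candidate virtual lights.

    basis[:, k] : appearance (e.g., radiance at sample points) produced by the
                  hardware shading model with candidate light k at unit intensity
    target      : the same samples from the accurate global illumination solution
    """
    weights, *_ = np.linalg.lstsq(basis, target, rcond=None)
    return np.clip(weights, 0.0, None)  # hardware lights cannot be negative
```

When the target really is a nonnegative combination of the basis, the fit recovers those intensities; otherwise the residual shows how well the chosen primitives can mimic the non-diffuse solution.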

You can get our SIGGRAPH '97 paper here.

Path-Buffer

The path-buffer is a modified z-buffer with two z values per pixel. The extra z value allows us to use the path-buffer and scan conversion to trace rays in a path tracing style algorithm. This reformulated algorithm is designed to be easy to accelerate using scan conversion and z-buffer type hardware. More details can be found in:

- "The Path-Buffer" - Technical Report PCG-95-4

- Bruce Walter and Jed Lengyel

Here are pdf versions of the technical report and figures.

I implemented the path-buffer on the simulator for UNC's PixelFlow machine as a proof of concept, with the goal of interactive path tracing in simple environments. But then I got distracted by working on my dissertation and have not worked on the path-buffer since.

Other projects

Iterative Adaptive Sampling

- "Accurate Direct Illumination Using Iterative Adaptive Sampling" - IEEE TVCG June 2006

- Michael Donikian, Bruce Walter, Kavita Bala, Sebastian Fernandez, and Donald P. Greenberg

Analyzing Ray Casting and Particle Tracing

Rays are used as a primitive in many different rendering algorithms, but unfortunately the costs of ray casting are not very well understood. In order to better understand the costs of the particle tracing phase of our density estimation radiosity algorithm, we are working on analyzing the costs of ray casting and the variance of particle tracing.

- "Cost Analysis of a Monte Carlo Radiosity Algorithm" - Technical Report PCG-95-3

- Bruce Walter and Peter Shirley

I don't have the source for the original report anymore, but here is a pdf version of an updated, though not quite finished, version of this technical report.

Email errors, corrections, or comments to "bruce.walter AT cornell.edu"
